Meta’s latest creation, the Ray-Ban Display smart glasses, has tech fans buzzing after its big reveal at Meta Connect 2025 on September 17. Priced at $799, these glasses hit U.S. stores on September 30, marking Meta’s first swing at consumer-ready augmented reality (AR) glasses with a built-in display. Far from just a souped-up version of the Ray-Ban Meta line, these glasses blend cutting-edge AI with iconic style, aiming to make AR a seamless part of daily life.

Here’s why they’re turning heads and what they mean for the future.

A Cool Twist on Smart Tech

Building on the success of the Ray-Ban Meta glasses, which have sold over 2 million pairs since their 2023 launch, the Ray-Ban Display keeps the classic Wayfarer vibe but packs a serious tech punch. A full-color display in the right lens delivers crisp visuals (600×600-pixel resolution, a 20-degree field of view, and up to 5,000 nits of brightness) for everything from texts to maps, all without blocking your view. The display pops up when you need it and vanishes when you don’t, keeping things sleek and unobtrusive.

These glasses are a bit chunkier than standard Ray-Bans to house the tech, but they still look sharp with transition lenses that adjust to sunlight. They come equipped with a 12MP camera for photos and 3K video, open-ear speakers for immersive sound, and multiple mics for crystal-clear calls.

With a charging case for top-ups on the go, they’re built for real-world use. You can try them at select retailers such as Best Buy, LensCrafters, Sunglass Hut, and Ray-Ban stores, with Verizon stores to follow. Online orders aren’t available yet, because Meta wants you to experience the glasses in person first.

The Neural Band: Control with a Flick of the Wrist

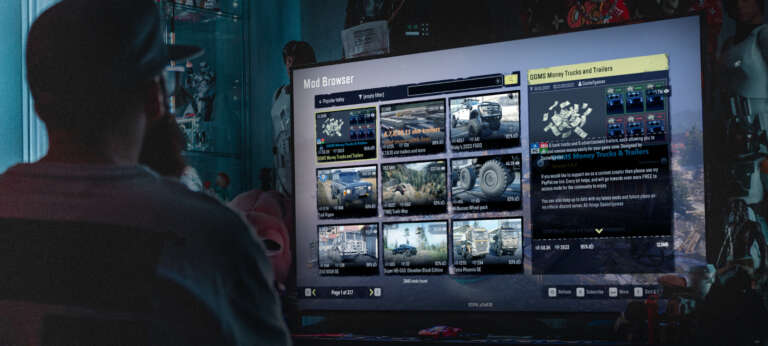

What makes these glasses stand out is the optional Meta Neural Band, a $199 add-on that’s like something out of a sci-fi flick. This lightweight wristband uses electromyography (EMG) to pick up the electrical signals produced by tiny muscle movements in your wrist, letting you control the glasses with subtle gestures, like “writing” texts with your finger or swiping to navigate menus.

Mark Zuckerberg showed it off at Connect, calling it a game-changer for making tech feel intuitive, almost like an extension of yourself. The glasses themselves are built with privacy in mind too: the display leaks just 2% of its light, so people nearby can’t easily see what you’re looking at. As one X user gushed, “Meta’s Ray-Ban Display feels like the first real smart glasses.”

AI That Enhances Your Day

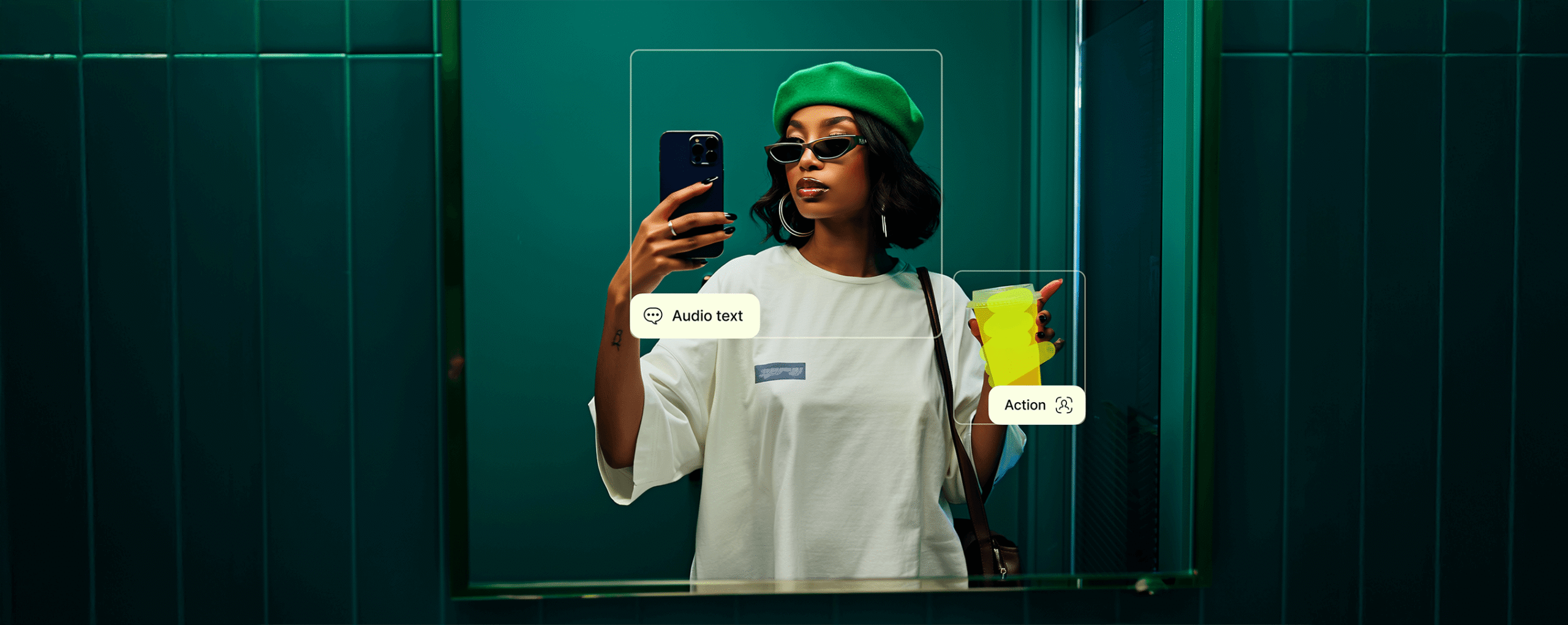

Powered by Meta AI, the Ray-Ban Display glasses are designed to keep you in the moment. The lens shows real-time translations for conversations, visual guides (like recipe steps or landmark details), and private notifications from WhatsApp, Messenger, or Instagram.

You can make video calls with screen sharing, follow turn-by-turn navigation (in beta for select cities), or use the camera for object recognition, which is perfect for travelers and multitaskers. Zuckerberg pitched them as the “ideal form factor for AI,” warning that people who skip this tech could find themselves at a “cognitive disadvantage.”

X users are already raving. One called the translation feature “mind-blowing” for global travel, while another highlighted how the glasses could help visually impaired users by describing their surroundings or reading text aloud. It’s not just about convenience: these glasses could make tech more inclusive.

When the Live Demos Went Sideways (Twice)

Meta promised sleek AI magic. What did it deliver live? Two very public flubs.

1. The Cooking Demo That Cooked Itself

Meta had a chef on stage put the glasses to the test: “Help me make a Korean-inspired steak sauce.” Instead of walking him through the recipe step by step, the AI skipped ahead and told him he’d already combined the ingredients, even though the bowl was empty. After several more tries, it kept repeating the same wrong advice.

Meta CTO Andrew Bosworth later explained that when the chef said “Hey Meta, start Live AI,” every pair of glasses in the room activated. Because the team had routed all of that Live AI traffic to a single development server, the server was overwhelmed. In effect, Meta had launched a distributed denial-of-service (DDoS) attack on itself.

2. The Ghost Call That Never Rang

Moments later, Zuckerberg tried to answer an incoming video call using the wristband. Everyone in the room heard it ring, but the call never appeared on the display. He made gesture after gesture, with no response.

Why? According to Bosworth, a “never-before-seen bug” meant the display had gone to sleep at the exact moment the call notification arrived, so the interface for answering the call never appeared, even after the display was woken. He called it a “terrible place for that bug to show up.”

Hurdles and Hype

At $799 (or $998 with the Neural Band), the Ray-Ban Display isn’t a budget buy, and the onstage glitches raised questions about polish. Privacy is another concern: built-in cameras and AI data collection could spark debate, though Meta’s visible recording indicator is meant to ease worries. Having the display in only one lens might also take new users some getting used to.

Still, the buzz is undeniable. With Meta’s holographic Orion prototype hinting at what’s next, the Ray-Ban Display feels like a stepping stone to a world where glasses rival smartphones. Will you grab a pair and dive into this AI-powered future, or wait to see how it plays out? Either way, Meta is making AR stylish, and it may soon change how we see the world. Literally.
