In an era where 3D content creation is often bottlenecked by manual labor and expensive scanning equipment, Seed3D 1.0 sets out to remove that bottleneck. Developed by ByteDance’s Seed team, the model automates asset creation, producing detailed geometry, photorealistic textures, and physically based rendering (PBR) materials from a single input image. With applications spanning embodied AI, virtual reality, and e-commerce, it has drawn wide attention in the tech community for its potential to scale 3D asset production far beyond what manual modeling allows.

The Magic of Single-Image 3D Reconstruction

At its core, Seed3D 1.0 simplifies what was once a complex, multi-step workflow. Users upload a single RGB photo, whether of a household object like a lamp, a natural element like a tree, or even a complex urban scene, and the model generates a complete 3D representation in seconds. The output includes explicit meshes with clean, closed-manifold (watertight) topology, so they can be loaded into physics engines without post-processing fixes.
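What "closed-manifold" means can be made concrete with a small check. The sketch below is an illustration under stated assumptions, not Seed3D’s own validation code: it assumes a triangle mesh given as a list of vertex-index triples, and the helper name `is_closed_manifold` is hypothetical. It tests only edges (every edge shared by exactly two triangles, each traversed once in each direction); it does not detect non-manifold "bowtie" vertices.

```python
from collections import Counter


def is_closed_manifold(faces):
    """Hypothetical helper (not a Seed3D API): edge-level watertightness check.

    Returns True if every undirected edge is shared by exactly two triangles
    and every directed edge occurs once, i.e. neighbouring faces are
    consistently oriented. Vertex-level manifoldness is NOT checked.
    """
    undirected = Counter()
    directed = Counter()
    for a, b, c in faces:
        for u, v in ((a, b), (b, c), (c, a)):
            directed[(u, v)] += 1
            undirected[frozenset((u, v))] += 1
    # An open boundary edge appears once; a non-manifold edge appears 3+ times.
    if any(count != 2 for count in undirected.values()):
        return False
    # A flipped neighbour traverses a shared edge in the same direction twice.
    return all(count == 1 for count in directed.values())


# A tetrahedron with outward-facing, consistently wound triangles.
tetra = [(0, 2, 1), (0, 1, 3), (0, 3, 2), (1, 2, 3)]
print(is_closed_manifold(tetra))      # True
print(is_closed_manifold(tetra[:3]))  # False: removing a face opens a hole
```

Counting edges is cheap (linear in the number of faces), which is why checks like this are a common first gate before handing a mesh to a simulator.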

What sets it apart is its focus on simulation readiness. Traditional 3D generation tools often produce visually appealing but structurally flawed models that falter in real-time simulations. Seed3D 1.0, however, incorporates precise edges, chamfers, and thin structures that maintain stability in environments like NVIDIA Isaac Sim. This makes it ideal for training robots or prototyping virtual experiences where physical accuracy is paramount.
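One reason watertightness matters for simulation is that physics engines need mass properties such as mass and center of mass for each rigid body. The sketch below shows the standard divergence-theorem computation for a closed, outward-oriented triangle mesh. It is a generic illustration, not the method Seed3D or Isaac Sim uses internally; the function name `mass_properties` and the uniform `density` parameter are assumptions for the example. On an open mesh the result depends on where the origin is, so the number is only meaningful once the mesh is closed.

```python
def _cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def _dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def mass_properties(vertices, faces, density=1.0):
    """Hypothetical helper: mass and center of mass of a closed, outward-oriented mesh.

    Each triangle forms a tetrahedron with the origin. By the divergence theorem
    the signed tetrahedron volumes sum to the enclosed volume; the result is only
    meaningful if the mesh is closed (watertight).
    """
    volume = 0.0
    moment = [0.0, 0.0, 0.0]
    for a, b, c in faces:
        p, q, r = vertices[a], vertices[b], vertices[c]
        v = _dot(p, _cross(q, r)) / 6.0  # signed volume of tetra (origin, p, q, r)
        volume += v
        for i in range(3):
            # The tetrahedron's centroid is (origin + p + q + r) / 4.
            moment[i] += v * (p[i] + q[i] + r[i]) / 4.0
    if volume <= 0.0:
        raise ValueError("non-positive volume: faces may be inward-oriented or degenerate")
    return density * volume, tuple(m / volume for m in moment)


verts = [(0, 0, 0), (1, 0, 0), (0, 1, 0), (0, 0, 1)]
faces = [(0, 2, 1), (0, 1, 3), (0, 3, 2), (1, 2, 3)]
mass, com = mass_properties(verts, faces)
print(mass, com)  # about 0.1667 and (0.25, 0.25, 0.25)
```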

Architectural Innovations: Diffusion Transformers and Beyond

Seed3D 1.0 is powered by a Diffusion Transformer (DiT) architecture, a 1.5B-parameter model trained on a massive proprietary dataset of synthetic and real-world 3D scans. The process unfolds in multiple stages:

- Geometry Prediction: The DiT core generates high-resolution meshes, capturing intricate details such as surface normals and curvature for lifelike forms.

- Material and Texture Separation: A specialized branch handles Bidirectional Reflectance Distribution Function (BRDF) decomposition, outputting 6K-resolution texture maps including albedo, metalness, roughness, and specular components. This ensures the models look realistic under varying lighting conditions.

- Scene Composition Pipeline: For more ambitious creations, Seed3D 1.0 uses vision-language models to detect objects, infer spatial relationships, and assemble them into cohesive scenes. Imagine turning a photo of a cluttered desk into a fully navigable 3D office space, complete with interactive elements.
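To see why separate albedo, metalness, and roughness maps make an asset "look realistic under varying lighting", it helps to see how a renderer consumes them. The sketch below evaluates the widely used metallic-roughness Cook-Torrance model (GGX distribution, Schlick Fresnel, Schlick-Smith masking with the remapping popularized by Unreal Engine 4) for a single texel and one directional light. It is an assumption-laden illustration, not Seed3D’s BRDF decomposition: the specular map mentioned above is ignored in favor of a fixed 4% dielectric reflectance, and the function names are hypothetical.

```python
import math


def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def _normalize(a):
    length = math.sqrt(_dot(a, a))
    return tuple(x / length for x in a)


def ggx_distribution(n_dot_h, alpha):
    """GGX / Trowbridge-Reitz normal distribution term D."""
    a2 = alpha * alpha
    denom = n_dot_h * n_dot_h * (a2 - 1.0) + 1.0
    return a2 / (math.pi * denom * denom)


def smith_schlick_g1(n_dot_x, k):
    """Schlick approximation of Smith masking for one direction."""
    return n_dot_x / (n_dot_x * (1.0 - k) + k)


def fresnel_schlick(v_dot_h, f0):
    return f0 + (1.0 - f0) * (1.0 - v_dot_h) ** 5


def shade(albedo, metalness, roughness, n, v, l):
    """Hypothetical helper: RGB radiance reflected from one unit-radiance light.

    albedo is an RGB tuple in [0, 1]; metalness and roughness are scalars in [0, 1];
    n, v, l are unit vectors (surface normal, toward viewer, toward light).
    Assumes a fixed 4% F0 for dielectrics instead of a specular map.
    """
    n_dot_l, n_dot_v = _dot(n, l), _dot(n, v)
    if n_dot_l <= 0.0 or n_dot_v <= 0.0:
        return (0.0, 0.0, 0.0)  # light or viewer below the surface
    roughness = max(roughness, 0.05)  # avoid a singular GGX lobe at zero roughness
    h = _normalize(tuple(a + b for a, b in zip(v, l)))  # half vector
    n_dot_h, v_dot_h = max(_dot(n, h), 0.0), max(_dot(v, h), 0.0)
    alpha = roughness * roughness        # perceptual roughness -> GGX alpha
    k = (roughness + 1.0) ** 2 / 8.0     # UE4-style remapping for analytic lights
    d = ggx_distribution(n_dot_h, alpha)
    g = smith_schlick_g1(n_dot_l, k) * smith_schlick_g1(n_dot_v, k)
    out = []
    for c in albedo:
        f0 = 0.04 * (1.0 - metalness) + c * metalness  # dielectric 4%, metals tinted
        f = fresnel_schlick(v_dot_h, f0)
        specular = d * g * f / (4.0 * n_dot_l * n_dot_v)
        diffuse = (1.0 - f) * (1.0 - metalness) * c / math.pi  # metals have no diffuse
        out.append((diffuse + specular) * n_dot_l)
    return tuple(out)


up = (0.0, 0.0, 1.0)
print(shade((1.0, 1.0, 1.0), 0.0, 1.0, up, up, up))  # each channel about 0.97 / pi
```

The metalness map acts as a switch between two regimes: at 0 the texel is a diffuse dielectric with a faint white highlight, and at 1 the albedo becomes the colored specular reflectance with no diffuse term.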

This end-to-end approach not only speeds up generation but also enhances multi-view consistency, reducing artifacts that plague older methods. ByteDance’s large-scale training regimen, involving millions of multi-view renders paired with ground-truth data, allows the model to generalize across diverse categories, from everyday objects to abstract designs.

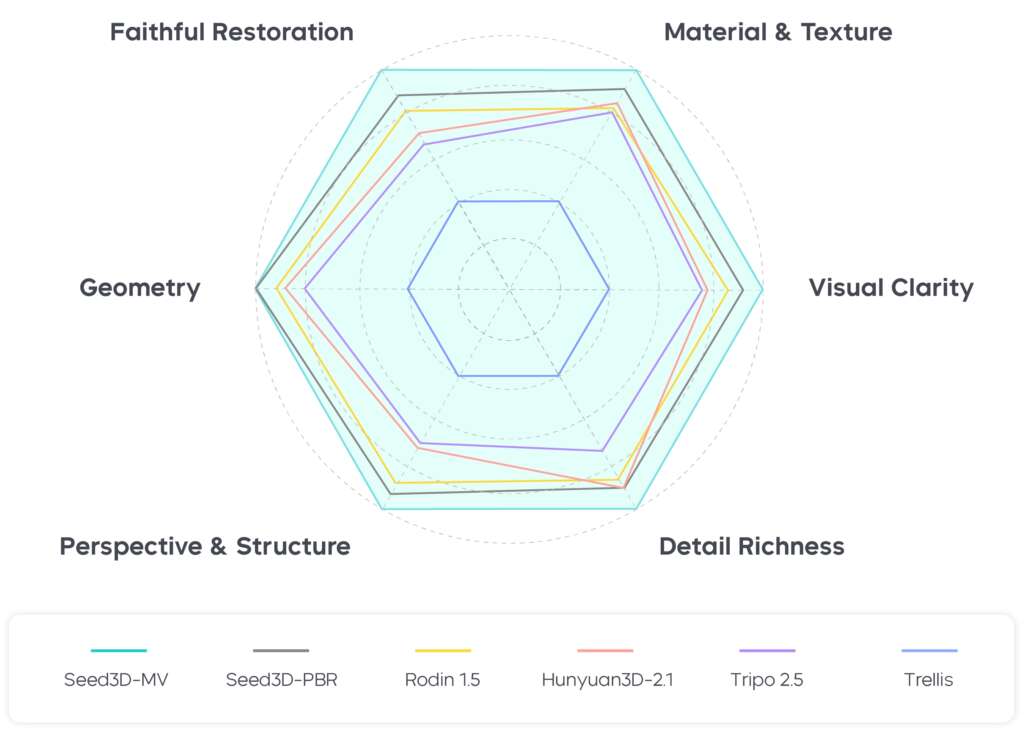

Benchmarking Excellence: Outpacing the Competition

Rigorous evaluations detailed in the model’s arXiv paper highlight its superiority over existing 3B-parameter rivals. Key metrics demonstrate Seed3D 1.0’s edge in fidelity and usability:

- Geometric Accuracy: Lower Chamfer Distance scores indicate better reconstruction of fine features, such as the veins on a leaf or the threads on a screw.

- Texture Quality: Higher PSNR (Peak Signal-to-Noise Ratio) values indicate that rendered textures match reference images more closely, with less blurring and fewer inconsistencies.

- Simulation Stability: With 98% success in drop-in physics tests, it far exceeds competitors’ 71% average.
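The two fidelity metrics above are simple to state in code. The sketch below uses one common convention for each: Chamfer Distance as the sum of the mean squared nearest-neighbour distances in both directions (papers differ on squared versus unsquared and sum versus mean, so values are only comparable under the same convention), and PSNR in decibels for pixel values in [0, `max_value`]. The function names are hypothetical, the brute-force search is for illustration only, and nothing here reproduces the benchmark numbers reported for Seed3D 1.0.

```python
import math


def _sq_dist(p, q):
    return sum((a - b) ** 2 for a, b in zip(p, q))


def chamfer_distance(points_a, points_b):
    """Hypothetical helper: symmetric Chamfer distance (squared, mean-reduced).

    Brute force O(N * M); real evaluations typically use KD-trees or GPU
    nearest-neighbour search over points sampled from both surfaces.
    Lower is better.
    """
    def one_way(src, dst):
        return sum(min(_sq_dist(p, q) for q in dst) for p in src) / len(src)
    return one_way(points_a, points_b) + one_way(points_b, points_a)


def psnr(image_a, image_b, max_value=1.0):
    """Hypothetical helper: PSNR in dB between two equal-length pixel sequences.

    Higher is better; identical images give infinity.
    """
    if len(image_a) != len(image_b) or not image_a:
        raise ValueError("images must be non-empty and the same size")
    mse = sum((x - y) ** 2 for x, y in zip(image_a, image_b)) / len(image_a)
    if mse == 0:
        return math.inf
    return 10.0 * math.log10(max_value ** 2 / mse)


print(chamfer_distance([(0, 0, 0)], [(1, 0, 0)]))  # 2.0
print(psnr([0.0, 0.0], [0.1, 0.1]))                # about 20 dB
```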

Real-World Applications: Transforming Industries

The implications of Seed3D 1.0 extend far beyond tech demos. In robotics, it enables rapid creation of synthetic datasets for training manipulation algorithms, accelerating development for warehouse automation and autonomous vehicles. Game developers can populate vast open worlds with custom assets, cutting production times and costs.

For XR (extended reality) and virtual production, the model’s PBR outputs integrate into tools like Unity or Unreal Engine, allowing filmmakers to prototype sets from location-scouting photos. E-commerce platforms could transform shopping with instant AR previews: snap a product photo, and customers can try the item in their own space.
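Getting PBR maps into an engine usually means following an interchange convention. As one example, the sketch below packs roughness and metalness maps the way the glTF 2.0 metallic-roughness material expects (roughness in the green channel, metalness in blue, with ambient occlusion optionally sharing red) and emits a matching material entry. This is an assumption about how such an export could look, not Seed3D’s exporter; the helper names and texture indices are hypothetical, and engine-native layouts differ, so check the target importer’s documentation.

```python
import json


def pack_orm(roughness, metalness, occlusion=None):
    """Hypothetical helper: pack per-texel maps (floats in [0, 1]) as
    (R=occlusion, G=roughness, B=metalness), the glTF 2.0 channel layout."""
    if len(roughness) != len(metalness):
        raise ValueError("roughness and metalness maps must be the same size")
    if occlusion is None:
        occlusion = [1.0] * len(roughness)  # 1.0 = full indirect light (no baked AO)
    elif len(occlusion) != len(roughness):
        raise ValueError("occlusion map must match the other maps")
    return [(o, r, m) for o, r, m in zip(occlusion, roughness, metalness)]


def to_8bit(texels):
    """Quantize [0, 1] float texels to 0-255 integers, clamping out-of-range values."""
    return [tuple(round(min(max(c, 0.0), 1.0) * 255) for c in t) for t in texels]


def gltf_material(name, base_color_texture, orm_texture):
    """Hypothetical helper: a glTF 2.0 material entry. The texture indices are
    placeholders for entries in the file's "textures" array."""
    return {
        "name": name,
        "pbrMetallicRoughness": {
            "baseColorTexture": {"index": base_color_texture},  # sRGB-encoded
            "metallicRoughnessTexture": {"index": orm_texture},  # linear
            "metallicFactor": 1.0,   # multiplies the B channel
            "roughnessFactor": 1.0,  # multiplies the G channel
        },
        "occlusionTexture": {"index": orm_texture},  # reads the R channel
    }


texels = to_8bit(pack_orm(roughness=[0.2, 0.9], metalness=[1.0, 0.0]))
print(texels)  # [(255, 51, 255), (255, 230, 0)]
print(json.dumps(gltf_material("lamp_body", 0, 1), indent=2))
```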

Even in education and research, Seed3D 1.0 democratizes 3D modeling, empowering students and indie creators who lack access to high-end software.

Limitations and Ethical Considerations

No model is perfect. Seed3D 1.0 may struggle with highly occluded or ambiguous inputs, where additional views could improve accuracy. The team acknowledges ongoing work to refine generalization for rare objects or extreme lighting.

Ethically, ByteDance emphasizes responsible use, with built-in safeguards against generating copyrighted or harmful content. As AI blurs the line between real and synthetic, discussions around digital authenticity are crucial.
