What Is Volumetric Video?

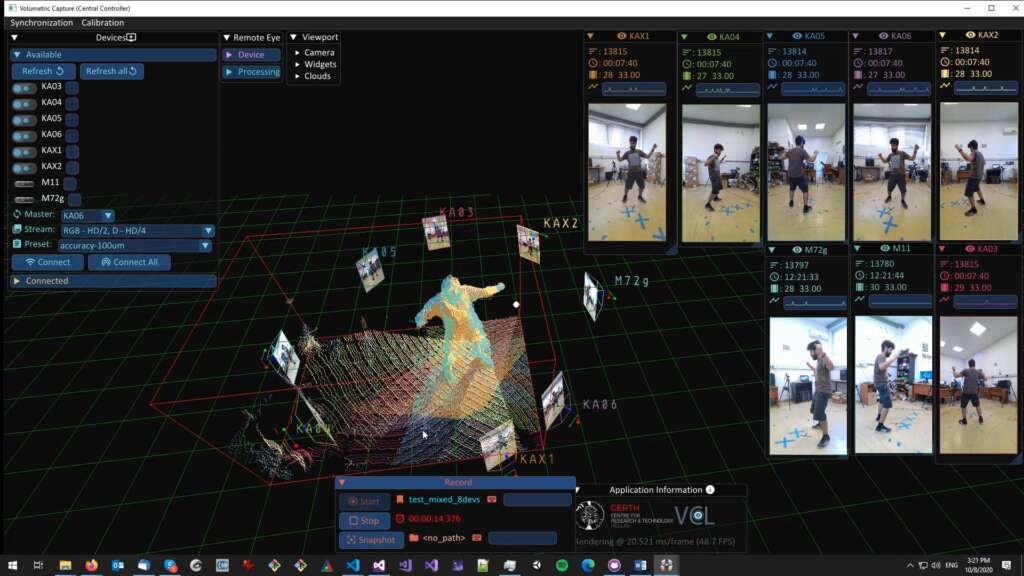

When you see volumetric video tech in action, it looks super futuristic, but it’s simpler than it seems. It captures real-world people, objects, or scenes in full 3D, letting you view them from any angle, like walking around a living hologram. Unlike flat 2D video, it uses arrays of cameras and sensors to build a dynamic three-dimensional model.
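To make the "cameras and sensors build a 3D model" idea a bit more concrete, here is a minimal sketch of one common building block: turning a single depth image into 3D points with a simple pinhole camera model. Everything in it is an illustrative assumption (the `Intrinsics` class, the `backproject` helper, the tiny 2x2 depth image, and the made-up focal lengths), not the pipeline of any particular capture system. A real rig would repeat this for every camera and every frame, then fuse the results.

```python
from dataclasses import dataclass


@dataclass
class Intrinsics:
    """Hypothetical pinhole camera parameters, in pixels."""
    fx: float  # focal length along x
    fy: float  # focal length along y
    cx: float  # principal point x
    cy: float  # principal point y


def backproject(depth, k):
    """Convert a depth image into 3D points in camera coordinates.

    `depth` is a list of rows of depth values in metres;
    a value of 0 (or less) means the sensor had no reading.
    """
    points = []
    for v, row in enumerate(depth):      # v = pixel row
        for u, z in enumerate(row):      # u = pixel column
            if z <= 0:
                continue                 # skip pixels with no depth
            x = (u - k.cx) * z / k.fx
            y = (v - k.cy) * z / k.fy
            points.append((x, y, z))
    return points


# Toy 2x2 depth image: one missing reading, three pixels at 2 m.
depth = [
    [0.0, 2.0],
    [2.0, 2.0],
]
k = Intrinsics(fx=1.0, fy=1.0, cx=0.5, cy=0.5)

cloud = backproject(depth, k)
print(len(cloud), "points:", cloud)
```

One depth camera only sees one side of a subject, which is why volumetric stages surround the scene with many of them.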

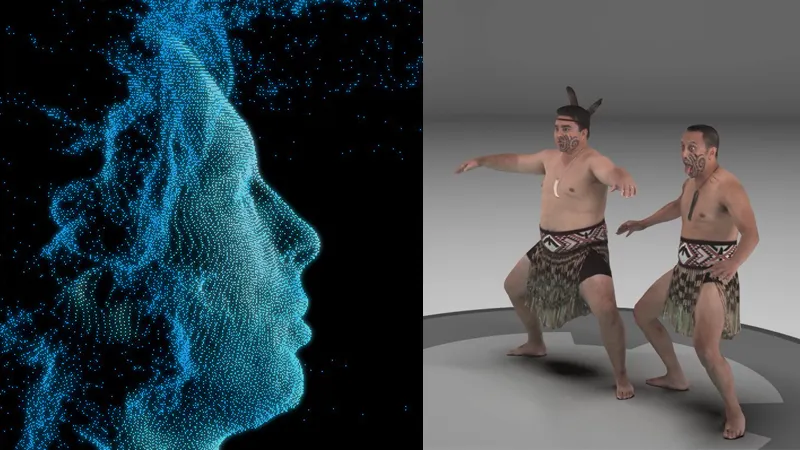

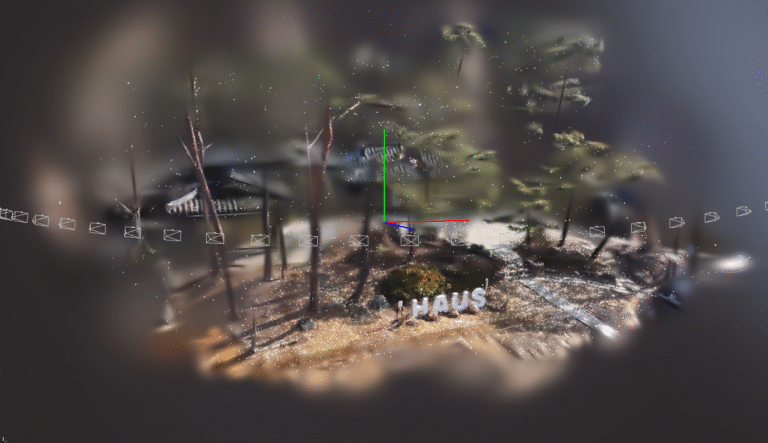

If you read our previous article on Gaussian splatting, you might see some parallels. Gaussian splatting uses point-based rendering to create highly detailed 3D models of static scenes, like a digital snapshot you can explore from any angle. Volumetric video is like the video version of that—capturing dynamic, moving scenes in 3D over time.

While Gaussian splatting excels at frozen moments, volumetric video brings those moments to life, adding motion and real-time interaction for immersive experiences like never before.

The Early Days: How It All Began

Volumetric video kicked off in the early 2000s with researchers experimenting with multi-camera setups to reconstruct 3D scenes. It started as a bridge between traditional video and virtual reality, aiming for lifelike representations beyond basic animations. Back then, it was clunky: think bulky studio rigs, sky-high costs, and limited playback.

Early adopters in the 2010s, spurred by devices like Microsoft’s Kinect, used depth-sensing tech to make 3D capture more accessible. By 2017, the focus shifted to dynamic, moving captures, creating hologram-like images of real people for VR and AR.

Fast-forward to 2025, and volumetric video is hitting its stride. At the CVPR conference in June, a tutorial called Volumetric Video in the Real World—hosted by NVIDIA and Google—dug into practical challenges like compressing massive data and fixing ‘viewpoint holes’ (gaps in 3D models when cameras miss angles).

At IBC 2025 in September, Nokia unveiled the first standards-based, real-time volumetric video communication system, built on MPEG’s Immersive Video (MIV) standard and Visual Volumetric Video-based Coding (V3C).

Real-World Uses and Examples

The use cases these days are wide-ranging, but volumetric video really shines where immersion matters most. At a concert, you could pick your own viewpoint; in sports, you could watch a replay from any angle.

In 2024, Gracia showcased ultra-realistic 3D captures of people on the Quest 3 VR headset, letting users interact with lifelike models in standalone VR.

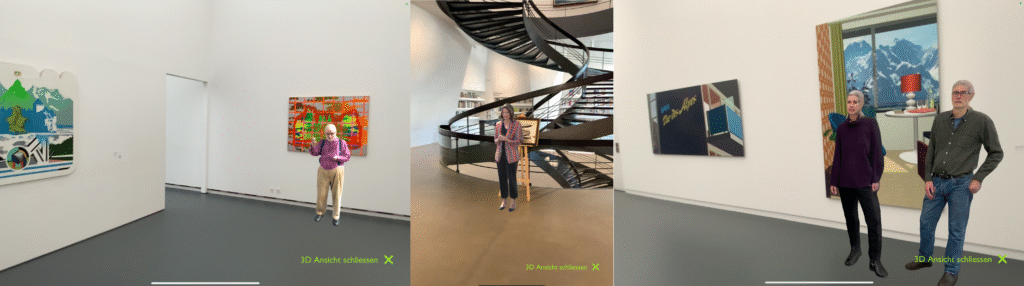

And just like a lot of virtual technologies, it’s being tested in schools to see if it can make a difference. In early 2025, Kent State University and Virginia Tech used volumetric capture software to blend multi-angle footage into 3D models.

Museums are on board too—a 2024 ACM study highlighted its use in XR exhibits, integrating real people for interactive storytelling. In film, companies like 4Dviews pushed volumetric tech in April 2025 to capture scenes in 4D (3D plus time) for richer cinema.

Challenges and the Future

It’s not all smooth sailing, though. Volumetric video generates huge amounts of data, which calls for heavy compression. Viewpoint holes remain tricky, and gear costs are still high, though they are dropping. A March 2025 Frontiers study tested the visual fidelity of sparse camera setups, with the aim of making capture more accessible through lighter rigs.
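To get a feel for why compression matters so much, here is a rough back-of-the-envelope estimate of an uncompressed point-cloud stream. The numbers are assumptions picked for illustration (one million points per frame, 15 bytes per point for three 32-bit coordinates plus RGB color, 30 frames per second), and `raw_rate_bytes_per_second` is a hypothetical helper, not a measurement from any real capture system.

```python
def raw_rate_bytes_per_second(points_per_frame, bytes_per_point, fps):
    """Uncompressed data rate of a point-cloud stream, in bytes per second."""
    return points_per_frame * bytes_per_point * fps


# Assumed values, for illustration only.
POINTS_PER_FRAME = 1_000_000
BYTES_PER_POINT = 3 * 4 + 3   # x, y, z as 32-bit floats + 3 bytes of RGB
FPS = 30

rate = raw_rate_bytes_per_second(POINTS_PER_FRAME, BYTES_PER_POINT, FPS)
megabytes_per_second = rate / 1e6
gigabytes_per_minute = rate * 60 / 1e9

print(f"{megabytes_per_second:.0f} MB/s")        # 450 MB/s
print(f"{gigabytes_per_minute:.0f} GB per minute")  # 27 GB per minute
```

Under these assumptions, a single minute of raw capture weighs in at about 27 GB, far more than a typical home connection can stream, which is the gap that codecs and standards are trying to close.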

Looking ahead, standards like MIV are setting the stage for everyday use. Soon, volumetric video could upgrade Zoom to 3D chats or make sports broadcasts interactive. As hardware improves and adoption grows, flat screens will feel outdated. Keep an eye on events like IBC for the next big steps.
